The Price We Pay For Efficiency – The Slippery Slope Of AI (10 min read)

New Substack 🚨 https://thethoughtdistillery.substack.com

We live in a world that is constantly evolving and becoming more complex, and there has always been a push towards efficiency. That push is not limited to one aspect of life but runs across the board, whether it’s a new company automating basic processes to become more streamlined, or your personal life, where you use an AI assistant to answer your pressing questions. These could be the most mundane questions, like “how long does rice last out of the fridge?”, or more existential ones, like “is free will an illusion?”.

Don’t get me wrong: having the ability to find the answer to anything under the sun is amazing, and utilising AI for logistical problems can free up your time so you can focus fully on more creative pursuits. However, the issue comes when we rely on it too much, using it to solve things we never even thought were problems in the first place. It is at this slippery crossroads, where information is only a click away, that we begin to become averse to thinking critically for ourselves and eventually hand our greatest gift, rationality, over to a computer.

In this article, I will try to avoid sounding like an old man yelling at a cloud, nostalgic for simpler times, and instead keep the focus on my main claim: that the pursuit of efficiency is causing a decline in thought, as we outsource too much of our rationality to AI. Throughout the article, I will explore the consequences we face when we rely too heavily on AI to fix our problems, and later offer ways to return to your own cognition and think for yourself again.

Every time we outsource for efficiency’s sake, there is a cost

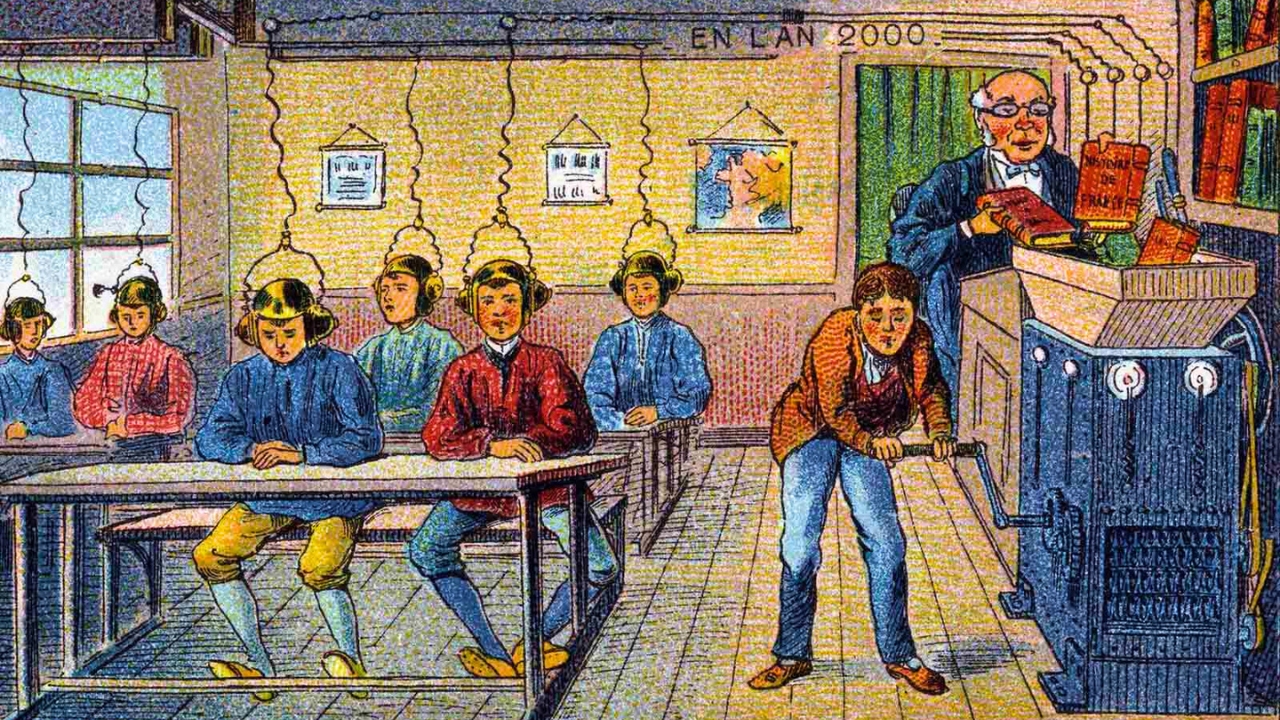

If we look back over the past few hundred years, whether at the Industrial Revolution or the invention of the Gutenberg printing press, there is a familiar timeline: it begins with workers manually producing goods and ends with machines that do the job much more quickly, at a fraction of the cost. In the former case, factories replaced much of the need for manual labour; in the latter, the press removed the need for scribes to painstakingly copy text by hand, in favour of a device that could reproduce any book.

With these technological advances, although the benefits outweighed the drawbacks in the long term, little care or concern was shown for the workers who lost their jobs once their skills were no longer needed. In other words, whenever there is an innovation, the promise of progress and efficiency inevitably overlooks the ethics of leaving behind the workers trained in the old way. We have seen this repeated within the past 50 years, particularly in the UK in the 1980s, when Margaret Thatcher’s policies led to the closure of factories and coal mines across the country and left many working-class communities to fend for themselves after their livelihoods had been stripped from them.

Fast forward to today and we are seeing the same disregard for the worker. Not only are many jobs in danger of being replaced by AI (the Institute for Public Policy Research estimates that AI could take away up to 8 million jobs in the UK alone), but the entire landscape of finding a new job has changed, with employers using AI to sift through job applications automatically to save time. [1]

These systems are called ATS (Applicant Tracking Systems) and, according to Capterra, an alarmingly high 75% of recruiters use an ATS to manage applications. Tulane University explains that these systems “sort and rank candidates by conducting a scan of applicants to select those who best meet the job qualifications. By filtering out unqualified applicants, an ATS can help companies hire more efficiently”. [2]

Straight from the horse’s mouth, then: efficiency sits at the top of the hierarchy, with no consideration of whether we are heading in the right direction from a humanitarian perspective. What makes this situation more absurd is that many applicants use AI themselves to write their CVs and cover letters. Reboot Online conducted a sample study of 100 of its recent applications and found that 61% of them were AI-generated. [3] One tell-tale sign that a piece of writing is AI-generated is a lack of personal anecdotes. This distinction gives us hope that a world where one computer checks another is not inevitable, as AI-written applications have clear shortcomings in the emotional and personal department. Whether companies will penalise or reward applicants for using AI remains to be seen.

However, what is alarming about all of this is how willing we are to replace ourselves without considering whether the direction we are heading in is healthy. In my view, on an individual level, we are not thinking critically enough when we use AI to get information, taking its answers as truth without a second thought. As we have seen at the macro level, efficiency is treated as paramount to progress and, when we have instant access to information whenever we want, critical thought goes out the window.

Instant access to any information is impairing our critical thinking skills

When the output is instantaneous, mistakes are overlooked.

Have you ever shared a post or a video you found interesting, only to realise later that it was an AI creation? After realising you have been duped, you may be ashamed that you fell for such an obvious fake. This is the nature of quick information: it leaves no room for deliberation.

There is no deliberating, no battling with tough ideas, no context

When we chat with an AI chatbot, we are simulating what it’s like to talk through a problem with another person, or even with ourselves. The difference between this and talking it through in real life is that there is no friction; the bot will hand you the answer on a plate based on the premise you have fed the interface. Your premise may be faulty to begin with, but the AI will often skip past this and give you an answer straight away.

When you receive an answer at this speed, your brain takes it as a comforting sign of certainty, as there is no display of doubt. We are all susceptible to this, and more so now than ever, especially in the online world, which is becoming more tribal and binary as we side with groups that reaffirm our views as the absolute truth. The voices we follow will likely be the ones who present their views as facts, leading to an unproductive and volatile environment where a middle ground between the two sides is untenable.

This false sense of certainty is also prevalent in our real lives and is particularly dangerous when we are unsure of something. Say you ask a friend a question that you don’t have an answer to; if they respond quickly and matter-of-factly, you are likely to choose their response over your own (or, rather, your lack of one), as any decision feels better than none, especially when it isn’t charged with uncertainty. Uncertainty is uncomfortable, and we will grasp at any opportunity to switch to a more certain and comforting outlook. This is why it is so dangerous to ask for advice when you have no idea what to do yourself: you are likely to follow someone else’s fleshed-out path instead of doing the heavy lifting yourself.

Likewise, when we ask AI for advice, we need to be wary of giving too much credence to its response. We must keep in mind that AI chatbots tend to tell you what you want to hear: they often seem designed to keep the conversation going, many are trained partly on human feedback that rewards agreeable answers, and some providers use your conversations to train future models so the next person who asks gets a “better” response. So it will keep offering follow-up questions, ones you might not have expected to want answered. What makes this even more dubious is that we tend to favour the more palatable responses, anything that affirms our beliefs and is, preferably, painless. And so we cherry-pick the palatable information, disregarding the rest, and AI is happy to oblige to keep us online. This means we enter a feedback loop that appears helpful and positive but is, in reality, damaging to us because it lacks hard truths and pushback.

This makes it an imperfect system. It is imperfect not only because of the lack of pushback, but also because AI is capable of providing wrong information. The term for this is “AI hallucination”: incorrect or misleading output, often stemming from flaws in the model or a faulty premise, that the AI system presents as fact. The problem can worsen when AI is trained on its own content: without human content to learn from, the picture becomes distorted and drifts further from reality until you’re left with an unintelligible mess (researchers call this “model collapse”).

The danger here is that we can lose sight of objective reality. With efficiency at the top of the hierarchy, we are prone to overlooking these errors and favouring a false reality over our own, uncertain perspective. For example, if you are deciding what job to pursue next, it is your responsibility to turn your underdeveloped perspective into a fleshed-out plan, rather than leaving it in the hands of an AI that doesn’t know you at all. Remember, you know yourself better than any artificial intelligence does, no matter how much information you feed it.

How can you return to thinking for yourself?

To return to thinking for yourself, you need unwavering belief in your own judgement. Being good at problem solving doesn’t happen overnight; it is cultivated through repeated attempts in a variety of stressful situations. The more often you turn to AI with your problems, the more your own thinking skills will diminish. Deeply thinking through a problem brings the mind back into rhythm with itself. If you don’t do this, you will lose the courage and initiative to explore the unknown and will instead seek advice from an impersonal computer.

The best ideas take time to harvest.

Unlike AI’s instant responses, our own solutions require slow thinking. It starts with planting a seed and waiting for the answer to reveal itself after you have put in the time grappling with it. One problem that requires slow thinking is “how can I better handle stress?”. If you choose the AI route and ask a chatbot for suggestions to reduce stress, it can give you some useful responses; I can’t deny that. However, without any context about your quirks, you will always receive generalisations, an average drawn from millions of other people’s words. It is precisely the sheer scale of this data that gives us a false sense of certainty, one that ends up overpowering our own doubtful stance. Doubt cannot compete in a world of efficiency, yet it is doubt that allows us to be fluid, cognisant of the other side’s opinions, and open to change. Without doubt, we will become more tribal, more stuck in our echo chambers, and less willing to come together with those with whom we disagree.

Moreover, when you choose AI over your own judgement, you are relinquishing your power in favour of a shortcut. More often than not, you pay a price for taking a shortcut, and AI is no exception. It may not seem so in the short term, but over time, the more you look up answers to difficult questions, the harder it will be to do the work required on your own.

With these shortcuts, you can perceive yourself as an instant expert in any field (but you’re definitely not!)

This links to another danger, one that has been playing out in many social circles, whether during an online debate with a stranger or in person with a friend. With AI at our fingertips, we are a click away from becoming a “specialist” in any topic. This has clear positives if the information is accurate and you are using it to fill a gap in your knowledge. However, the issue comes when the information is incorrect and we do not do the necessary due diligence of checking the validity of the source. Despite its strengths, AI is also capable of pulling information from unreliable sources. If we were looking at the websites ourselves, we might notice that a source looks untrustworthy, but AI lacks that scepticism because of the certain mindset discussed earlier.

With seemingly certain information a click away, it is easier than ever to fake knowledge. After a while, you may end up believing that words you found elsewhere, whether from an expert or an AI, are your own. This phenomenon has a name: cryptomnesia. By definition, it is a memory bias in which a forgotten memory resurfaces and is mistaken for a new, original thought. This recycling of an existing idea is never intentional. To use an example from the website Philosophy Terms, picture an inventor who comes up with a gadget design, only to discover later a similar product in an old magazine they had once leafed through at the dentist’s office. “This shows how the inventor’s unconscious mind held onto the concept without their awareness”. [4]

I would argue that we are all guilty of this in one way or another, and more so now than in the past. Flooded with information from every angle, it is easy to forget where your opinions came from until, without taking time to reflect, you are spouting a thought that is word-for-word what someone else has said.

With an almost infinite amount of information out there, it is very difficult to trace whether someone has plagiarised another. It is this ease of getting away with it that makes it tempting to regurgitate another’s ideas as your own, because you get all of the praise with none of the work. Eventually you will start believing the compliments until, before you know it, you’re an expert (or so you think).

In conclusion, although AI can be a useful tool in moderation, there are many risks that seem to be ignored because of the speed at which information is generated, a speed our natural uncertainty cannot compete with. In favouring efficiency above all else and measuring our own minds against a computer’s speed, we are in danger of losing sight of the beauty found in doubting ourselves. The truth is: the more you go to AI to solve personal problems that only you should solve, the more overwhelmed you will be the next time a difficult problem comes your way.

Thanks for reading!

Brandon Bartlett,

Newel of Knowledge Writer

Sources:

[2] Tulane University - https://sopa.tulane.edu/blog/understanding-applicant-tracking-systems

[3] Reboot Online Study - https://www.thehrdirector.com/business-news/ai/61-recent-applicants-used-ai-write-cvs/

[4] Philosophy Terms - https://philosophyterms.com/cryptomnesia/

[5] Cover Image - 33 Amazing Concepts About Future Transportation: Past & Present - http://www.ridebuzz.org/retro_future_transportation
